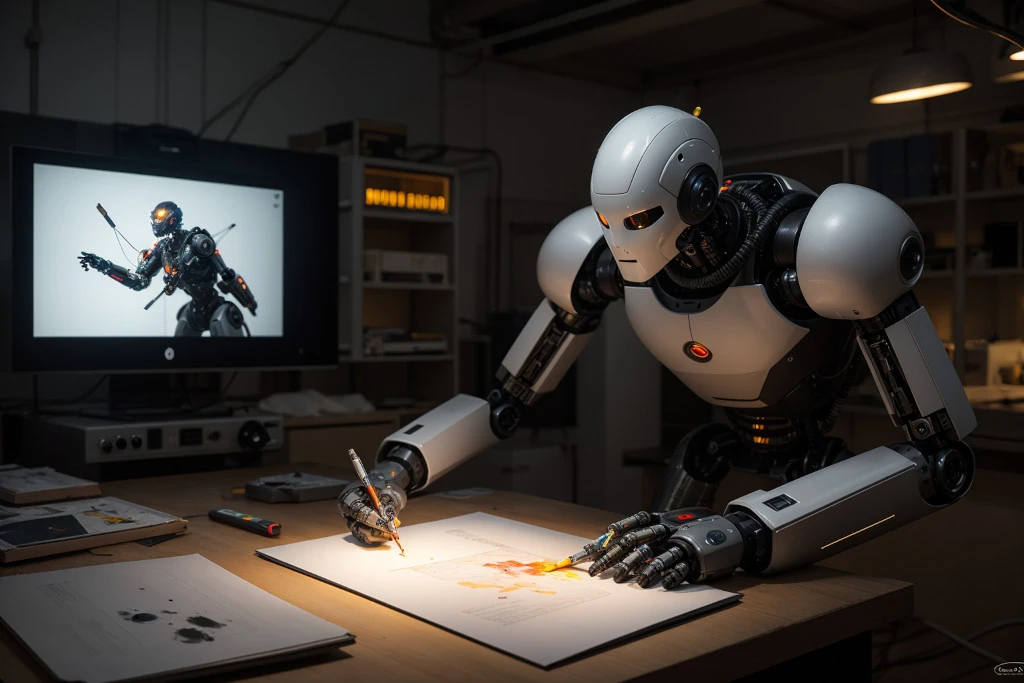

Text Generation

Text generation is a fascinating field within artificial intelligence (AI) and natural language processing (NLP) that focuses on creating human-like text using machine learning algorithms and models. These models are trained on vast amounts of text data and learn to generate new text based on the patterns, styles, and structures present in the training data. Text generation has numerous applications across various domains, including creative writing, content creation, dialogue systems, language translation, and more.
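As a minimal sketch of the train-then-generate loop described above, the following Python code builds a word-level bigram model (a first-order Markov chain) from a toy corpus and then samples new text from it. The function names, the corpus and the whitespace tokenization are illustrative assumptions. Real systems learn far richer patterns from vastly more data, usually with neural models rather than raw word counts.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """'Training': record, for each word, every word that followed it in the corpus."""
    words = text.split()  # naive whitespace tokenization (illustrative assumption)
    transitions = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        transitions[current_word].append(next_word)
    return transitions


def generate(transitions, start_word, max_words=10, seed=None):
    """'Generation': repeatedly sample a word that followed the previous word."""
    rng = random.Random(seed)
    output = [start_word]
    for _ in range(max_words - 1):
        followers = transitions.get(output[-1])
        if not followers:  # dead end: this word was never followed by anything
            break
        # Choosing from the raw list weights each follower by how often it was observed.
        output.append(rng.choice(followers))
    return " ".join(output)


corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigram_model(corpus)
print(generate(model, "the", max_words=8, seed=42))
```

Because "cat" follows "the" twice in the corpus while "mat" and "fish" follow it once each, the model is twice as likely to continue "the" with "cat". This is the sense in which the generated text reflects patterns in the training data.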

What Is Text Generation

Techniques and Algorithms: Text generation techniques range from traditional rule-based systems to advanced deep learning models. Some common approaches include:

- Markov models: These models use probabilistic methods to predict the next word in a sequence from the preceding words. Under the Markov assumption, only a fixed number of previous words is considered (in a bigram model, just the one immediately before).
- Recurrent Neural Networks (RNNs): RNNs are a neural network architecture designed to process sequential data by carrying a hidden state from one step to the next. They have been widely used for text generation because they can model dependencies across a sequence. Plain RNNs struggle with long-range dependencies, however, which is why gated variants such as LSTMs and GRUs became the usual choice.
- Generative Adversarial Networks (GANs): GANs consist of two neural networks, a generator and a discriminator, trained adversarially so that the generator learns to produce samples the discriminator cannot tell apart from real text. Because text consists of discrete tokens, GANs are harder to train for text than for images and are less common for this task.
- Transformers: Transformer models, such as OpenAI's GPT (Generative Pre-trained Transformer) series, have achieved state-of-the-art performance in text generation tasks. They use attention mechanisms to capture dependencies between all positions in the input text, which helps them generate coherent and contextually relevant output.
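The core operation behind the Transformer approach is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, where Q, K and V are the query, key and value vectors and d_k is the key dimension. The pure-Python sketch below computes it for a toy sequence. It is a simplification built on stated assumptions: the token vectors are made up, the raw embeddings serve directly as queries, keys and values (real models apply learned projections), and there is a single attention head. The optional `causal` flag masks future positions, which is how GPT-style decoder models ensure that each token attends only to the tokens before it.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    max_score = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def scaled_dot_product_attention(queries, keys, values, causal=False):
    """Compute softmax(Q K^T / sqrt(d_k)) V row by row.

    Returns (outputs, weights): each output row is a weighted average of
    the value vectors, and weights[i] is position i's attention distribution.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # mask future tokens: weight becomes 0
            else:
                scores.append(dot(q, k) / math.sqrt(d_k))
        weights = softmax(scores)
        out = [sum(w * v[d] for w, v in zip(weights, values))
               for d in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy self-attention: three made-up token vectors act as queries, keys and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens, causal=True)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row is one position's attention distribution. With `causal=True` the first token can only attend to itself, while later tokens spread their weight over everything up to and including their own position. Because attention compares every pair of positions directly, distant words can influence each other without passing through a chain of recurrent steps.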

OpenAI is an artificial intelligence research laboratory focused on advancing the field of artificial general intelligence (AGI) while ensuring that its benefits are shared broadly across humanity.